"LoveHater" by Carolina Rodriguez Fuenmayor

Remember when a great concern of the zeitgeist was whether playing violent video games would encourage violent behavior?

For over 50 years, intense research was dedicated to deciphering whether violence in the media can predispose viewers to violent behavior. The 2019 answer (despite people like Trump falsely clinging to the outdated debate) is no; in fact, violent media is more likely to cause crippling trauma than to indoctrinate you.

This week, The Verge’s Casey Newton recounted interviews with 100 moderators of “violent extremism” on YouTube and Google. Based on testimonies of American-based employees (never mind the small army of “cleaners” that tech companies amass overseas to exploit cheap labor), the litany of moderators’ documented mental health issues ranges from anxiety and depression to insomnia and other intense PTSD symptoms. And it’s no secret to the managers at Google and YouTube. Those who deem themselves “the lucky ones” are granted paid leave to address the mental health concerns that regularly arise among moderators, who are expected to spend full work days viewing footage of child abuse (both physical and sexual), beheadings, mass shootings, and other forms of extreme violence.

The content flagged for review is divided into queues, reports The Verge. From copyright issues, hate speech, and harassment to violent extremism (VE) and adult sexual content, the queues are handled by hundreds of moderators employed either in-house or through outside contractors like Accenture, which runs a moderation site in Austin, Texas. Many are immigrants who jumped at the opportunity to work for a major media company like Google. “When we migrated to the USA, our college degrees were not recognized,” says a man identified as Michael. “So we just started doing anything. We needed to start working and making money.”

Considering that some videos disguise disturbing content as Peppa Pig clips in order to slip into kid-friendly digital spaces, moderators do feel a sense of social responsibility and satisfaction in removing dangerous and inappropriate content from the Internet. But, of course, the companies’ bottom lines prioritize not a safer digital space but capital and ad revenue. Similar to Amazon’s notorious workers’ rights abuses, Google has imposed increasingly inhumane and bizarre restrictions on its moderators, from raising their quotas to banning cell phones, and then pens and paper, from the floor and limiting time for bathroom breaks. “They treat us very bad,” Michael adds. “There’s so many ways to abuse you if you’re not doing what they like.”

Michael works for Accenture, where the average pay is $18.50 an hour, or about $37,000 a year. By contrast, an in-house moderator for Google, a woman identified as Daisy, described her full-time position at the California headquarters as ideal on paper. She earned about $75,000 a year with good benefits, not including a grant of Google stock worth about $15,000. Ultimately, she left the job with long-lasting PTSD symptoms because, she said, “Your entire day is looking at bodies on the floor of a theater. Your neurons are just not working the way they usually would. It slows everything down.”

Specifically, a moderator’s job is to view at least five hours of content every day; that’s five hours of watching mass shootings, hate speech and harassment, graphic crimes against children as young as three years old, and images of dead bodies resulting from domestic and foreign terrorism (such as ISIS) or from other killings (such as a man shooting his girlfriend on camera). “You never know when you’re going to see the thing you can’t unsee until you see it,” Newton concludes from his 100 interviews. Some moderators suffered severe mental health effects after a few weeks, while others endured years before they were forced to take leave, quit, or hospitalize themselves.

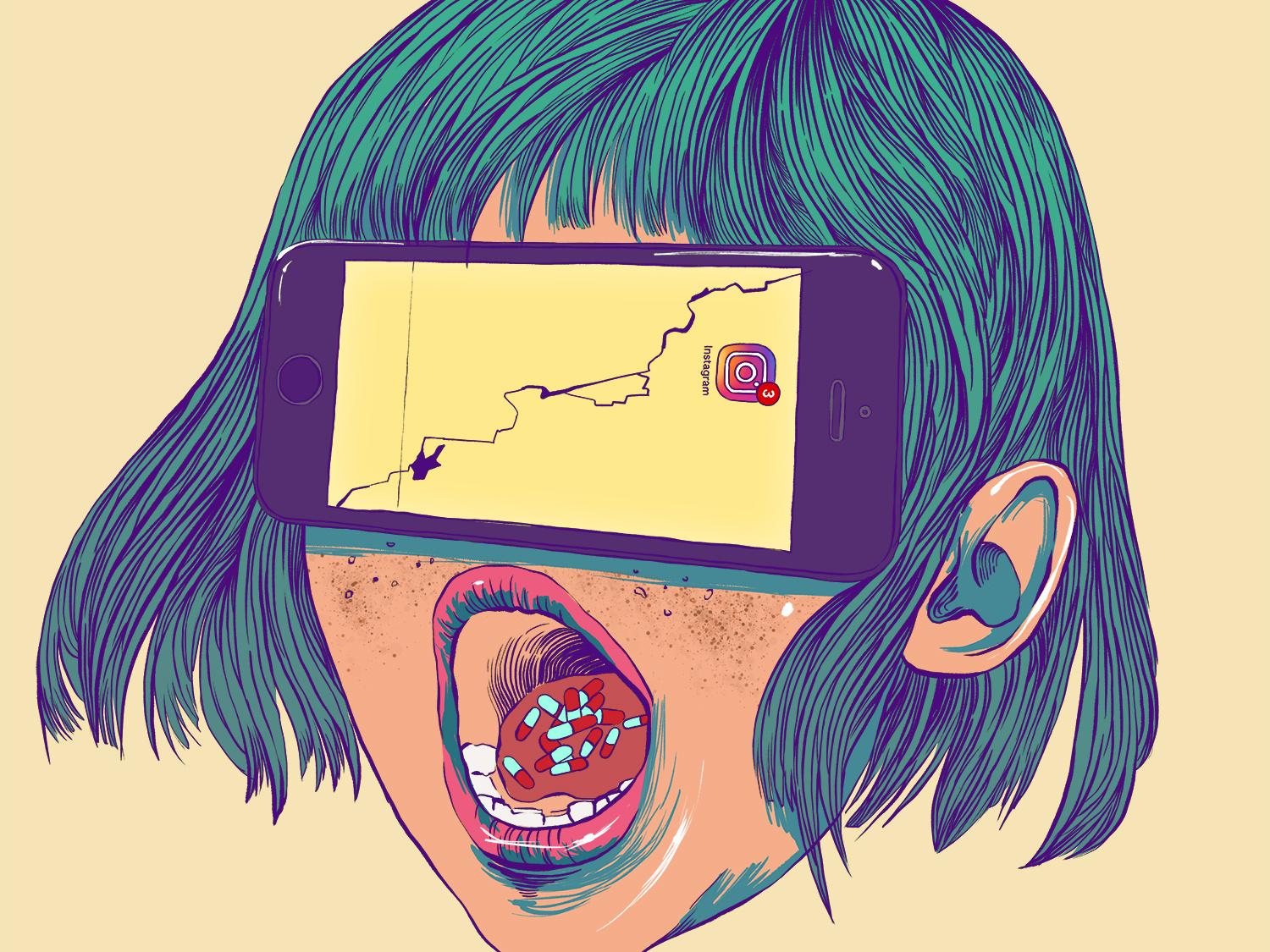

“Virus” by Carolina Rodriguez Fuenmayor


Secondhand Trauma

“Every gunshot, every death, he experiences as if it might be real,” Newton writes about one moderator’s trauma. And that’s what it is: trauma in the age of YouTube. While the human psyche has been documented buckling under the weight of atrocity since ancient civilizations’ records of soldiers committing suicide, the term “posttraumatic stress disorder” only gained recognition in the 1970s, amid the domestic fallout of the Vietnam War.

Today, studies estimate that 8 million Americans aged 18 and over display symptoms of PTSD, which is about 3.6% of the U.S. adult population. Furthermore, 67% of individuals “exposed to mass violence have been shown to develop PTSD, a higher rate than those exposed to natural disasters or other types of traumatic events,” according to the Anxiety and Depression Association of America. And the more traumatic events one is exposed to, the higher the risk of developing PTSD symptoms.

When it comes to “secondary” trauma, experiencing mental and emotional distress from exposure to another person’s experience is generally associated with therapists and social workers. The contagion of secondhand trauma was already known before YouTube launched in 2005, and social scientists have broadly concluded that “vicarious traumatization,” “secondary traumatic stress (STS),” or “indirect trauma” is a real, clinical effect of graphic media in the news cycle. One study of press coverage of the 2013 Boston Marathon bombing found: “Unlike direct exposure to a collective trauma, which can end when the acute phase of the event is over, media exposure keeps the acute stressor active and alive in one’s mind. In so doing, repeated media exposure may contribute to the development of trauma-related disorders by prolonging or exacerbating acute trauma-related symptoms.”

In the age of increasingly pervasive media coverage and exposure to all varieties of human behavior, secondary trauma is inevitable. Yet among the general public, it’s often unacknowledged or even mocked. Newton recounted, “In therapy, Daisy learned that the declining productivity that frustrated her managers was not her fault. Her therapist had worked with other former content moderators and explained that people respond differently to repeated exposure to disturbing images. Some overeat and gain weight. Some exercise compulsively. Some, like Daisy, experience exhaustion and fatigue.”

“All the evil of humanity, just raining in on you,” Daisy told Newton. “That’s what it felt like — like there was no escape. And then someone [her manager] told you, ‘Well, you got to get back in there. Just keep on doing it.’”

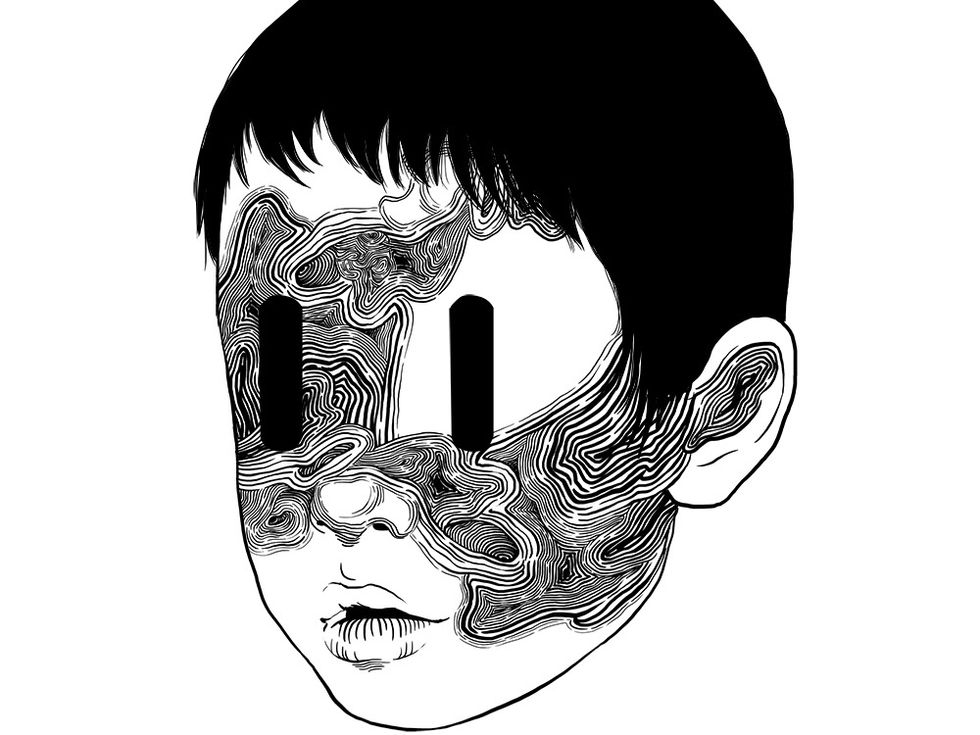

“Sorrow and Fire” by Carolina Rodriguez Fuenmayor


Broadcasting Trauma

What constitutes “traumatic” media? The World Health Organization has gone so far as to define “violence” for the international community: “the intentional use of physical force or power, threatened or actual, against oneself, another person, or against a group or community, which either results in or has a high likelihood of resulting in injury, death, psychological harm, maldevelopment, or deprivation.” With the average U.S. adult spending over 11 hours a day “listening to, watching, reading or generally interacting with media,” a digital user is exposed to real-world violence, global acts of terrorism, intimate partner violence (IPV), and casualties of freak accidents on a daily basis. Streaming entertainment occupies much of that time, but radio reaches up to 92% of adults on a weekly basis, and live TV “still accounts for a majority of an adult’s media usage, with four hours and 46 minutes being spent with the platform daily,” according to Nielsen.

The problem with media is no longer as simple as violent video games. Live news reports stream a rising number of far-right terrorist incidents (up 320% over the past five years) and mounting casualties. Meanwhile, shootings in the U.S. have intensified in frequency and fatalities, with gun deaths reaching their highest per-capita rate in more than 20 years (12 gun deaths per 100,000 people). With social media, you can view police shootouts live on Twitter, watch a mass shooter’s livestream of his attack, see fatal police brutality caught on tape, or witness someone commit suicide on Facebook.

Who’s policing this content? Instagram and Facebook are ostensibly tightening enforcement of their community guidelines by demoting potentially injurious content, or by debating before Congress the limits of both free speech and Mark Zuckerberg’s latent humanity. As of November 2019, Twitter allows some sensitive material to be placed behind a content warning, provided it serves the purpose “to show what’s happening in the world,” but bans posts that “have the potential to normalize violence and cause distress to those who view them,” including “gratuitous gore,” “hateful imagery,” “graphic violence,” or adult sexual content. What happens after you hit the “report” button? At Google (and its subsidiary YouTube), the work falls to underpaid, overworked, and neglected moderators who are denied lunch breaks and vacation time if their queue has a heavy backlog of footage.

But what the Hell are we supposed to do about it? If we stumble across these images—even view some of them in full—do we become culpable for their existence?

We’ve been fretting over the human psyche’s ability to withstand traumatic images since the dawn of photography, particularly after photographs of the 20th century’s World Wars exposed inhumane suffering to international audiences for the first time. “Photographs of mutilated bodies certainly can be used…to vivify the condemnation of war,” writes Susan Sontag in 2003’s Regarding the Pain of Others, “and may bring home, for a spell, a portion of its reality to those who have no experience of war at all.” She also notes, “The photographs are a means of making ‘real’ (or ‘more real’) matters that the privileged and the merely safe might prefer to ignore.” In contrast, photography theorist Ariella Azoulay challenges Sontag by examining the fundamental power relations between viewer and object in her book The Civil Contract of Photography, where she argues that a violent photograph demands that the viewer respond to the suffering depicted. If the role of a photograph is “creating the visual space for politics,” then how much more does a moving image demand of us? Clicking the “report” button on Twitter? Writing to our congresspeople? Taking to the streets and rioting?

In her longform essay, Sontag wrote: “Compassion is an unstable emotion. It needs to be translated into action, or it withers. The question is what to do with the feelings that have been aroused, the knowledge that has been communicated. If one feels that there is nothing ‘we’ can do – but who is that ‘we’? – and nothing ‘they’ can do either – and who are ‘they’? – then one starts to get bored, cynical, apathetic.”

“This Life Will Tear You Apart” by Carolina Rodriguez Fuenmayor


Ultimately, Google’s failure to properly respect and support the mental health of its content moderators reflects an American problem of exceptionalism and the resulting drive to optimize at all costs. What drives the average American to filter the world through a screen for nearly half of the day is stress over keeping up with trends and current events and being as productive as possible, followed by the urge to escape those anxieties in their downtime. Digital space, the realm of the image, is both the crime scene and the respite. The injustice calling us to action, whether in the form of boycotts or Twitter rants, is the fact that media is being regulated by a small cohort of billion-dollar companies with little to no regard for actual human life. Governments expect tech companies to police their own services with no outside oversight, while Google, a company that brought in $136.22 billion in revenue in 2018, is “just now beginning to dabble in these minor, technology-based interventions, years after employees began to report diagnoses of PTSD to their managers,” according to Newton.

“It sounds to me like this is not a you problem, this is a them problem,” is what Daisy’s therapist told her. “They are in charge of this. They created this job. They should be able to … put resources into making this job, which is never going to be easy — but at least minimize these effects as much as possible.” As of this week, they’re putting (minimal) effort into that. Google researchers are experimenting with technological tools to ease moderators’ emotional and mental distress from watching the Internet’s most violent and abusive acts every day: they’re considering blurring out faces, converting videos to black and white, or changing the color of blood to green. It’s a fitting choice: blood the color of money.
